Check your eligibility now & get in touch with a study center


Detailed Description


Call 1800-9860-568 now to find out if you are eligible.

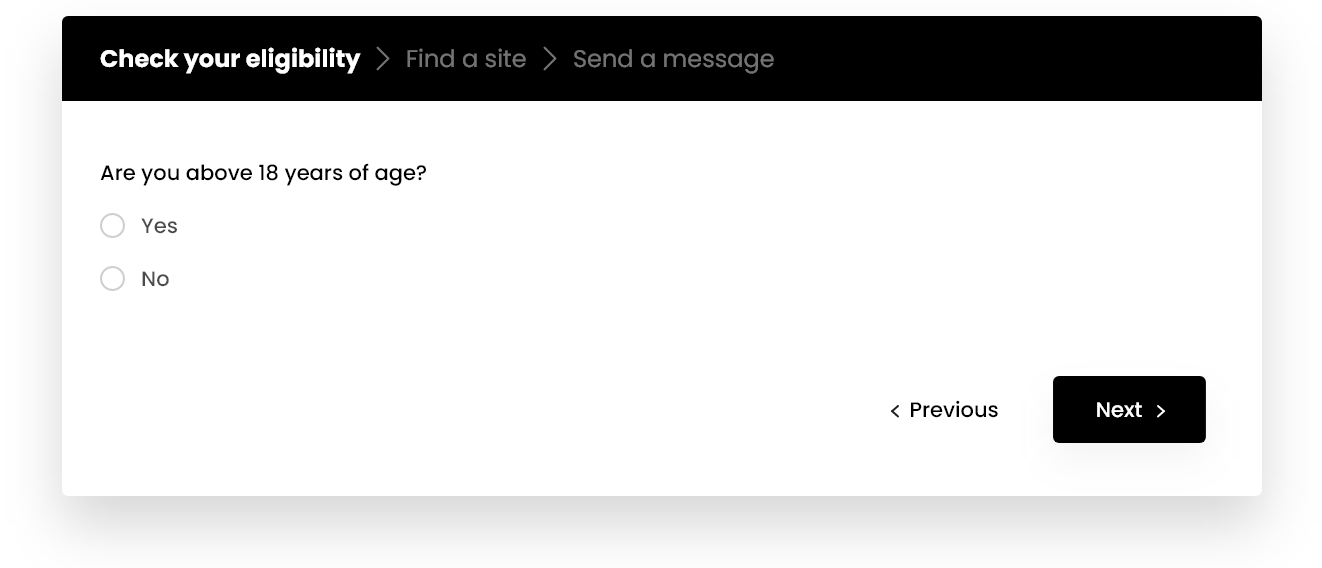

Check your eligibility

Take this questionnaire to help us determine whether you have the symptoms common to this clinical study. If you do, you may be eligible to take part in the study.

Age

18 and older

Gender

All

NCT ID

NCT07110688

Phase

-1

Status

Recruiting Now

Medical Condition

No condition

How is Plaque Psoriasis treated?

Your Journey

01

Receiving the medication

You would receive etanercept (Enbrel) twice a week for 12 weeks and then once a week for 12 weeks. Etanercept and study-related medical care are provided at no cost.

02

Visiting the study site

Study participation involves approximately 8 visits to your local study center over 6 to 7 months.

03

Follow-up

There would also be a follow-up telephone call 30 days after you complete the study. No visits are required after participation is complete.